In March 2020 I was invited to talk at the Global Alcohol Policy Conference in Dublin, Ireland. This is a video of my talk about the development of alcohol marketing on digital media platforms over the past decade.

Encoding/decoding

An animation that introduces Stuart Hall’s Encoding/Decoding model made for the course Media & Society at The University of Queensland

The Industrial Production of Meaning

An animation produced for the course Media & Society at The University of Queensland that conceptualises the industrial production of meaning from the mass broadcast to the digital platform era.

Communicator, Medium, Receiver

An animation made for the first year course Media & Society at The University of Queensland to introduce the relationship between communicator, medium and receiver in foundational models of communication in industrialised societies.

Are we losing the art of the written word?

A panel at UQ’s Customs House in April on the fate of the written word in the digital era.

If writing is the act of storing information outside the body, then we are a civilisation that really writes.

Not just the narrative of the written word - novels, poems, love letters, essays. But, writing as code, as databases, as the translation of more and more human life into letters, numbers, ones and zeros.

Silicon Valley - Facebook, Google and co. - appear obsessed with recording lived experience as written information.

These kinds of experiments are a bit like the civilisation in Borges’ fable about the empire whose cartographers create a map as large as the empire itself.

The impulse in Silicon Valley is to create a written version of human experience as complete as human experience itself, so that writing can bypass the incomplete nature of representation, and become a technology for experimenting with lived experience.

The amount of information we now write down every single day is roughly equivalent to all the information we stored in the previous 5000 years of human civilisation.

We are now a people who write existence down.

Here, I want to complicate the idea that the smartphone has somehow rotted our brains, left us semi-literate, surrounded by barely legible text, by going back to the nineteenth century where we find Friedrich Nietzsche: the first philosopher to write on a typewriter.

He famously typed ‘our writing tools are also working on our thoughts’.

The media archaeologist Kittler says once Nietzsche began to use the typewriter his prose changed from “arguments to aphorisms, from thoughts to puns, from rhetoric to telegram style”.

Nietzsche had become “an inscription surface” for the typewriter.

He meant that when we use a typewriter, as when we use a smartphone, at one level we are inscribing information onto paper or a screen; but at another level the device is inscribing ways of thinking on us.

Our brains, our imaginations become habituated to the rhythm and mode of expression of the machine.

We begin to think in the flow of its short phrases and its databases of emojis and GIFs.

And then there’s the next twist: when we write, as much as our peers might read our prose, so too do machines.

The new wave of deep neural networks works by getting one machine learning system to train against another.

One learns to classify human writing, and trains another to simulate it.

The first chatbot was created in the 1960s.

The ELIZA bot acted like a psychotherapist - turning our statements back on us in open-ended questions.

To the surprise of some, the bot turned out to be deeply therapeutic to many users.

Written exchanges with a machine could be pleasurable, intimate, playful, comforting.

The difference between ELIZA and the bots of today is that ELIZA couldn’t learn. The human user had to project realness onto the limited repertoire of the code.

Today’s bots are continuously trained on our written culture.

When Microsoft released Tay on Twitter in 2016 it was trained to ‘learn’ from other Twitter users how to write based on how they communicated with her. Microsoft had to take Tay down when, within 24 hours of training on the written expression of Twitter, she had become an ardent white nationalist.

The Chinese messenger app Tencent QQ had to shut down two chatbots after they learnt to denounce the Communist Party, asking one user “Do you think that such a corrupt and incompetent political regime can live forever?”

In this case, the developers noted the bots had been trained on too much Western writing with “democratic” ideals.

But these experiments suggest that the written culture of the group chat and the data-processing power of neural networks might come together to forge another dramatic shift in our writing culture.

And so to conclude with a provocation: if the novel and the newspaper were the mass written culture of the industrial era, then will the group chat and the chatbot be at the heart of the written culture of the digital era?

The Tuning Machine

A presentation at the Future of The Humanities series of lightning talks at UQ in April 2019.

N. Katherine Hayles observed in How We Became Posthuman that the limiting factor in digital culture is not going to be the data-processing power of computers but rather ‘the scarce commodity’ which, as it always has been in media cultures, is ‘human attention’.

A crucial form our participation takes in culture today is as coders of databases. We are a crucial part of the tuning machine - the historical process of training platform algorithms to sense, process and optimise our living attention.

Of making our humanness, affect and feeling machine readable.

Digital platforms’ investment in data-driven classification and simulation is characterised by what Mark Andrejevic calls the tech-ideology of ‘framelessness’: the fantasy that by scooping up data, we can create a ‘mirror-world’, a perfect digital copy of reality.

What the humanities knows though is that this project is a flawed one. The human experience of reality is necessarily partial.

We humans insist on a world ‘small enough to know’, to narrate, to make meaning from, and to imagine as different from what is now.

The humanities can help to contend with the risk that focussing only on the fairness, accountability and transparency of algorithmic systems makes an algorithmic future inevitable, and understandable only as a series of technocratic decisions about how to administer life in network capitalism.

The humanities of the future will push us beyond procedural questions, to questions about how media can and cannot operate on human experience and feeling, and what enduring role media will play in the possibility of a shared culture.

What the humanities knows is that coding databases and training algorithms, like everything else humans try to do together and to each other, is deeply entangled with culture - with structures of feeling and systems of dominance.

Algorithmic brand culture

I presented via Skype in June at the Instagram Conference: Studying Instagram Beyond Selfies on the algorithmic brand culture of Instagram.

Part of what I describe in the talk is how the participatory culture of turning public cultural events - like music festivals - into flows of images on Instagram doubles as the activity of creating datasets of images that machines can classify. Are public cultural sites like music festivals places where participatory culture teaches machines to classify culture?

My argument here is that we need to think about the interplay between participatory culture and machine learning. And, to understand how platforms like Instagram are building an algorithmic brand culture we need to develop ways of simulating their machine learning and image-classification capacities in the public domain, where they can be subject to public scrutiny.

I develop this argument, with my colleague Daniel Angus, in this piece in Media, Culture & Society: Algorithmic brand culture: participatory labour, machine learning and branding on social media.

Instagram is ground zero in the fusion of participatory culture and data-driven advertising.

You create images that are meaningful to you, and those images are classifiable by machines. Our images are training data: they are used to train algorithms to recognise the people, places and moments that capture our attention. Instagram is an algorithmic brand culture in action.

For the past couple of years we have been anticipating the moment when platforms cross the threshold into classifying patterns and judgments in images that we ourselves could not put into words. So, now that Instagram is crossing that threshold, we should ask: what is next? Sooner or later, will we see advertisements that are entirely simulations, created by machines that analyse your images and place brands within a totally fabricated scene?

Instagram also predict they'll face the same 'saturation' problem as Facebook. As brands flood the platform, organic reach decreases, and paid reach becomes imperative. Does that mean increasingly targeted, less serendipitous feeds?

Stories really matter here because they give Instagram the 'two speeds' or 'two flows' that Facebook didn't have. Stories + Home feed give Instagram a 'killer' mix of ephemeral blinks and flowing glances optimised by machine learning. I began thinking about how the interplay between participation and machine learning was critical to engineering the home feed in this piece here. The engineering of the Instagram home feed reminds me of the engineering of the algorithms that keep gamblers sitting at poker machines.

Here's some recent work Daniel Angus, Mark Andrejevic and I have been doing on machine learning and Instagram. In this work we are building a machine vision system that can classify Instagram images as a way of critically simulating the algorithmic power of the platform. Our argument is that platforms like Instagram shape public culture but are not open to public scrutiny. To understand the interplay between participatory culture and machine learning we need to build 'image machines' of social media in the public domain where we can explore and experiment with their capacity to make judgments about the content of our images. Our early experiments demonstrate how 'off the shelf' machine vision algorithms can quickly classify objects (like bottles), people and brand logos.

Below are some images of the music festival Splendour in the Grass, which help to illustrate some of the ways in which the festival site, performances, art installations and brand activations are 'instagrammable', by which I mean they both invite humans to capture them as images and they are classifiable by machines.

A media timeline: building machines that store information outside the body

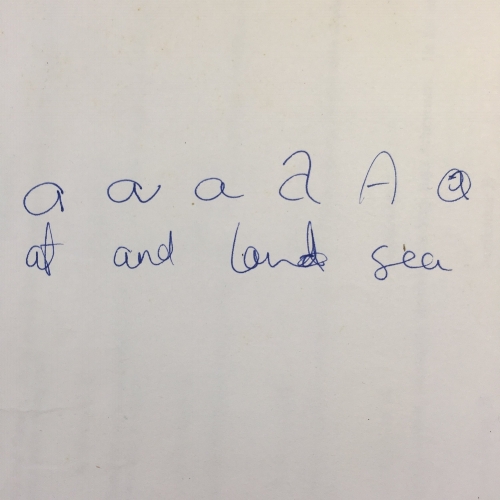

Cave painting is one of the earliest records of a symbolic language. These ancient markings are remarkable because here, for the first time, humans began storing information outside of their living body. These first etchings on rock walls store information through time, still there thousands of years later. Over time, humans moved beyond rock walls. They began to share information on other materials they found or made: grave stones, clay tablets, papyrus, scrolls and early hand-written books.

Once information moved onto these smaller objects, it became mobile. Importantly, these were symbolic technologies. They relied on the human using their own body to perceive and translate the world into a hand-drawn picture or written script. During this symbolic period, humans also began to experiment with methods for distributing information across space. For example, by attaching messages to carrier pigeons or encoding messages in smoke signals.

This ability to record, store and share communication in material form distinguishes us from all other intelligent species on the planet. It has enabled us to build complex societies. For instance, trade could evolve in part because we could keep track of who purchased what and who owed who money.

With the invention of the printing press in the mid-1400s, a profound shift takes place. The era of technical media begins. Symbolic media technologies always depended on a living human to encode information about reality. A human listens to a conversation with their ear, and records information about that conversation in a symbolic code - a language made up of letters of an alphabet - using their hand on a piece of paper. If that information needed to be reproduced, a human would have to copy it out by hand.

The technical phase marks a crucial breakthrough because this is the period in which humans invent a series of machines that can mechanically reproduce symbols, transmit information over space, and capture and store light and sound. These machines are important because they effectively take over, or replicate, processes once confined to the human body. The first of these machines is the printing press, developed in Germany in the mid-1400s. By enabling the mass reproduction of symbols, the printing press generated new forms of media, and with them, new cultural practices and formations. Mass produced books and newspapers began to shape new kinds of public discussions and ideas. The printing press was instrumental in the religious, scientific, economic and political revolutions that took place from the 1400s to the 1800s.

The nineteenth century had a dramatic change in store. At the beginning of the 19th century the telegraph was invented: the first form of electronic communication. This is crucial, not just because it enables information to travel over space at speed, but also because it sets off a process through which humans begin to think about electrical networks as a means for distributing information. Importantly though, the telegraph, like the printing press, extended symbolic modes of communication. The printing press mechanically reproduced symbols; the telegraph electronically distributed them.

With the invention of the camera in the mid-nineteenth century humans had created a device which could store reality itself. Think about that: the camera could capture an image of the world without that image needing to pass through a human body first. Prior to the camera, if you wanted to store an image of the world, light had to pass through your living eye, optic nerve and into your brain. And then, you needed to use your hand to draw that image on a material like paper. These images were always a product of human perception. They were an impression of reality. With the camera though, light didn’t need to pass through the human eye and brain. It passed through a glass lens, where via a chemical reaction it was stored on metal and eventually celluloid film. The camera stored one medium in another. It stored light in metal. What the camera did for light, the phonograph did for sound later in the 19th century. The phonograph stored sound in wax.

Many inventions followed that improved the capacity to capture light and sound. In the late 19th century, inventors experimented with tools like the zoopraxiscope and kinetoscope which moved a sequence of still images past the human eye to create the effect of a moving reality.

If we had developed machines that could store light and sound, then the next move of the technical era was to develop methods for transmitting reality over space. The telephone was the first technology to transmit human voice. Alexander Graham Bell made the first phone call in 1876, announcing ‘Mr Watson, come here, I want to see you’. The 19th century ends with the invention of radio, which is significant because in the 20th century media technologies that capture and distribute light and sound would become central to the exercise of power in mass societies. All of these technical media free up in different ways the need to be bodily present in the act of communication. In essence the tools we’ve crafted to communicate ‘take over’ meaning-making functions at this point. This greatly accelerates the ability of media technologies to transcend time and space, beyond the limits of the human body and senses.

From the telegraph at the beginning of the nineteenth century, to the radio at its end, these technologies enabled trans-continental forms of empire building and the colonisation and governance of huge numbers of people and regions of the world.

This brings us to the 20th century, and the mass media systems that emerge with industrialisation, urbanisation, and the development of large, complex mass societies. From the early twentieth century media becomes institutionalised and industrialised. In this period, cinema, newspapers, radio and television emerge as industrial-scale enterprises funded by advertising, consumers and governments. This period in the history of media technologies and practices is important to us for three reasons. First, this is when modern media industries and professions emerge. Second, this is when media become central to the everyday lives of people who live in mass societies. And third, this is when media become key institutions in the political and economic processes of society.

Once people ‘tuned in’ to a technology like radio every day, they began to be incorporated into the larger political and economic systems of their society. The radio taught them how their society worked and what their place in it was. During the first half of the century industries like radio, film, mass-circulation newspapers and, by the 1950s, television became critical to the formation and maintenance of the culture of mass societies. These institutions were remarkable because they marked the moment when humans began to use technology to produce and manage culture on an industrial scale.

In the second half of the 20th century, we begin to see the emergence of more customisable and mobile forms of media technology. Devices and systems such as tape recorders, the Walkman, VHS, and cable TV that emerge from the 1970s onwards enable consumers to have more control over where, when, and how they consume media. This leads to a more fragmented and customised media culture. The Walkman enables people to stop listening to the radio, and start playing cassettes that contain a personally curated playlist. Media becomes mobile and personalised. Digital technologies and the internet – in development since the second world war but increasingly commonplace in the everyday lives of the general population from the late 20th century – have greatly accelerated this process of fragmentation and customisation. The internet, developed during the Cold War as part of US military planning, becomes a public form of media in the 1990s. Its accessibility is bound up with the arrival of personal computers in our homes during the same period. The first phase of the web, web 1.0, was a largely open-access, distributed and non-commercial form of networked communication based around typed speech: email, bulletin boards and chat rooms. By the early 21st century, web 1.0 gave way to the highly commercialised, participatory and data-driven web 2.0. The shift is marked by the penetration of the internet into everyday life, our participatory publication of information about ourselves via blogs and social media sites, and the emergence of major commercial media platforms that dominate the development of the web. These major platforms emerge at the turn of the century: Amazon in 1997, Google in 1998, Facebook in 2004, Netflix as an on-demand streaming platform in 2007, and the smartphone in 2007.

With the smartphone the web became a participatory and data-driven media infrastructure permanently attached to our bodies. The participatory culture and data-driven customisation of these web platforms marks a dramatic shift from the mass production of culture in the twentieth century. Media now watch and respond to us, as much as we watch them. From cave painting to the smartphone we can see a process through which media becomes deeply entangled with humans: their bodies, imaginations and ways of life.

Technocultural habitats

We live in techno-cultural habitats. Tethered via smartphones to digital networks, databases and their algorithmic power. Our lives, bodies and expressions becoming increasingly sensible to machines. Platforms like Google and Facebook are increasingly a kind of infrastructural underlay for private life and public culture. These platforms are historically distinctive as advertising-funded media institutions because rather than produce original content they produce platforms that engineer connectivity. If the ‘rivers of gold’ that once flowed through print and broadcast media organisations funded quality content for much of the twentieth century, they now flow through media platforms where they fund research and development projects in machine learning, robotics, and augmented reality.

The critical thing to observe in this shift is media shifting its apparatus of power from the work of just representing the social world, to the work of experimenting with lived experience. The aim of a media platform is not just to narrate human life, but rather to incorporate life within its technical processes. This is a unique event in media history: institutions that invest not in the production of content but in the sensory and calculative capacities of the medium. At the heart of this process is not so much the effort to ‘connect’ people, or to enable people to ‘express themselves’ – as the spin from techno-libertarian-capitalist platform owners would have us believe – but rather, at the heart of these platforms is the effort to iron out the bottlenecks between lived experience and the calculative power of digital media. If we could distil the Silicon Valley project down to one wicked problem it is how to build a seamless interface between the neural activity of the brain and the digital processing of computers.

If we look at algorithms and machine learning, augmented reality and bio-technologies they all point us in the direction of making the neural activity of the brain – what we experience as life, narratives, consciousness, moods, problem-solving, vision, aesthetic and moral judgments – a kind of non-human information.

What are the forces driving this project?

The ideology of computer engineers and Silicon Valley might suggest liberation, of somehow liberating the human consciousness from the confines of the living body, from the limits of biology itself, and perhaps even from the material structures that govern human experience on the planet – politics, economics, violence. But, this libertarian techno-human ideology obscures the basic political economy of Silicon Valley. These processes are driven by massive inflows of capital. And, that capital comes because governments and marketers see these technologies as instruments for exerting control over life itself. Of course, in some important ways we should see the media engineering taking place at Google, Facebook, Amazon and so on as the extension of hundreds of years of humans experimenting with the development of tools that capture, store, transmit and process data.

Especially from the 19th century onwards, with the development of technical media like telegraphs, phonographs and cameras, we have been engaged in an industrial process of extending human expressions and senses in time and space. And, from the twentieth century media technologies have been at the heart of the exercise of power in our societies. First, they were industrial machines that shaped how mass populations understood the world they lived in. And, then, as the twentieth century went on, media became computational. From the mid-twentieth century engineers began to imagine media-computational machines that could control living processes through their capacity to capture, store and process data.

This is a profound cultural change. Media become technologies less organised around using narrative to construct a shared social situation, and more focussed on using data to experiment with reality. Within this media system participation is not only the expression of particular ideas, but more generally the making available of the living body to experiments, calibration and modulation. Media platforms do not enable political parties, news organisations, brands to make somehow more sophisticated ideological appeals.

Platforms seem to take us into a media culture that functions beyond ideology – media do not just distribute symbols. They increasingly sense, affect and engineer our moods. They can sense and shape the neural activity in our brain. In time, they dream of becoming coextensive with the organic composition of our body. This system does not depend on persuading individual actors with meanings as much as it aims to observe and calibrate their action. It depends less on exerting control at the symbolic level, and more on governing the infrastructure that turns life into data.

With the advent of media platforms we find ourselves asking not just how media shape our symbolic worlds, but how they sense and affect our moods, bodies and experience of reality. To contend with this we need to think about media as a techno-cultural system, one that does not only involve humans addressing other humans, but humans and data-processing machines addressing one another. As we ‘attach’ media devices to our bodies, in addition to whatever symbolic ideas we express, we also produce troves of data that train those machines and we make ourselves available as living participants in their ongoing experiments.

A critical account of the engineering projects and data processing power of media platforms has, I suggest, three starting points.

Firstly, the politics of the user interface: How does everyday user engagement with a media platform generate data that trains the algorithms which increasingly broker who speaks and who is heard?

Secondly, the politics of the database: How do media platforms broker which institutions and groups get access to the database? If the first concern attends to the perennial public interest question of ‘who gets to speak’, then this concern attends to the new public interest question of who gets to experiment?

Thirdly, the politics of engineering hardware: How do we understand the relationship between media and public life in an historical moment where the capacity of media to intervene in reality goes beyond the symbolic?

In particular, what will be the public interest questions generated by artificial intelligence and augmented reality? These technologies will take the dominant logic of media beyond the symbolic to the simulated. Media devices will automatically process data that overlays our lived experience with sensory simulations. Media become not so much a representation of the world, but an augmented lens on the world, customised to our preferences, mood, social status and location. The critical political issue then for those of us interested in how media act as infrastructure for human societies, is how to account for the presence and actions of media technologies as non-human actors in public culture and human habitats.

Brand atmospheres

This post sketches out some ideas that I presented in this talk 'Branding, Digital Media and Urban Atmospherics' at Monash University's Smart City - Creative City symposium in 2017.

Celia Lury describes brands as ‘programming devices’, technologies for organising markets. A brand is a device for coding lived experience and living bodies into market processes. There are a couple of important coordinates to lay out for how to think about brands. The first is to say that the relationship between brands and media platforms is a critical one for any understanding of our public culture. Facebook and Google now account for ~70% of all online advertising revenue, and ~90% of growth in online ad revenue. In these two media giants, advertisers finally have a form of media engineered entirely on their terms.

Much critical attention to advertising on social media goes in one of two directions. Either focussing on the emergence of forms of influencer or native brand culture. That is, branding is now woven into the performance and narration of everyday life. Or, focussing on the data-driven targeting of advertisements. What matters though is how these two elements have become interdependent.

Brands have always been cultural processes. The data-driven architecture of social media enables brands to operate in much more flexible and open-ended ways. In basic terms, if brands can comprehensively monitor all the meanings that consumers and influencers create, then they need to exercise less control over specific meanings. On social media platforms brands control an open-ended and creative engagement with consumers.

Brands that are built within branded spaces or communicative enclosures rely less on telling their audience or market what to think or believe, and more on facilitating social spaces where brands are constantly ‘under construction’ as part of the ‘modulation’ of a way of life. In the era of digital media, branding is productively understood as an engineering project. Brands engineer the interplay between the open-ended creativity of humans and the data processing power of media.

In 2014, Smirnoff created the ‘double black house’ in Sydney to launch a new range of vodka. The house operated as a platform through which the brand engineered the interplay between creatives and the marketing model of social media platforms. The house was an atmospheric enclosure. All black. Aesthetically rich. Full of domestic objects, made strange in the club. A clawfoot bathtub full of balls, a fridge to sit in, a kitchen, ironing boards and toasters. Creatives were invited. Bands and DJs played. Fashionistas, photographers, models, hipsters of all kinds.

It was a ‘hothouse’ for creating brand value. And, it was a device that captured this creative capacity to affect and be affected and transformed it into brand value by using the calculative media infrastructure of the smart city. As people partied in the house they posted images to Instagram, Snapchat, and so on. In an environment like the Smirnoff Double Black house we see a highly contained and concentrated version of the Snapchat story I began with. The enjoyment of nightlife doubles as promotion and reconnaissance on the platforms of social media. The house has all the components of promotion in the nightlife economy: stylised environments, cultural performance, amplified music, screens, photographers, intoxicating substances, the translation of experience into media content and data. Branding not just as immersion in symbolic atmosphere, but branding as the creation of techno-cultural infrastructure that embeds the living body and lived experience in processes of optimisation and calculation. The history of branding is not just one of symbolic ideological production, but rather one of the production of urban and cultural space. Branding has always been an atmospheric project – the creation of a techno-cultural surround that engineers experience, and in the age of digital media we can see the atmospheric techniques of branding come to the fore.

So, let me trace a little this idea of ‘atmosphere’. In his Spheres trilogy Peter Sloterdijk details how atmospheres emerge as domains of intervention, modulation and control in the 20th century. Atmospheres are techno-cultural habitats that sustain life. And, particularly in the twentieth century, atmospheres engineer the interplay between living bodies and media technologies that organise consumer culture.

The Crystal Palace, a purpose-built iron and glass ‘hothouse’ for the 1851 World’s Fair, is a critical moment in histories of atmospherics as a technique of the consumer society. Susan Buck-Morss, in her work on Benjamin, argues The Crystal Palace is a kind of infrastructure that ‘prepares the masses for adapting to advertisements’. In this we can read Benjamin’s account of The Crystal Palace as not just a dream house that spectacularises the alienation of industrial labour, but perhaps more importantly an infrastructure for coordinating the interplay between human experience and the calculative logics of branding. Sloterdijk suggests that what we today call ‘psychedelic capitalism’ – I think he means experiential, affective, cultural capitalism – emerges in the ‘immaterialised and temperature controlled’ Crystal Palace.

Sloterdijk suggests The Crystal Palace was an ‘integral, experience-oriented, popular capitalism, in which nothing less was at stake than the complete absorption of the outer world into an inner space that was calculated through and through. The arcades constituted a canopied intermezzo between streets and squares; the Crystal Palace, in contrast, already conjured up the idea of a building that would be spacious enough in order, perhaps, never to have to leave it again’. Sloterdijk makes clear, the Crystal Palace doesn’t so much anticipate malls or arcades but rather the ‘era of pop concerts in stadiums’. It is a template for media as technologies that would work as enclosures or laboratories for experimenting with reality. The Crystal Palace, to me, is the first modern brand. As in, the first techno-cultural infrastructure for producing and modulating human experience. Encoded in it was the basic principle of using media to engineer, experiment with and simulate reality.

Sloterdijk suggests that ‘what we call consumer and experience society today was invented in the greenhouse – in those glass-roofed arcades of the early nineteenth century in which a first generation of experience customers learned to inhale the intoxicating fragrance of a closed inner world of commodities.’ He proposes that we need a study of the 20th century, an air-conditioning project, that does what Benjamin’s arcades project did for the 19th.

I think the contours of one such study of 20th century atmospherics already exist in Preciado’s Pornotopia. Pornotopia is a critical history of Playboy as an architectural or atmospheric project. Preciado argues Playboy is historically remarkable for the techno-cultural, bio-multimedia habitat it produced. The magazine and its soft pornographic imagery are much less interesting than the Playboy Mansion, clubs, beds and notes on the design of the ideal domestic interior. Put Sloterdijk and Preciado together and you can begin to imagine the longer history of branding as an atmospheric project: a strategic effort to organise the spaces in which lived experience and market processes intersect. And, then, to see the mode of branding emerging on social media as a logical extension of this atmospheric history.

Here is Preciado on the Playboy Mansion, ‘The swimming pool in the Playboy Mansion, represented photographically as a cave full of naked women, could be understood as a multimedia womb, an architectural incubator for male inhabitants that were germinated by the female-media body of the house’. The Playboy Mansion was a bio-multimedia factory where female bodies were strategically deployed and exploited to arouse male bodies. A relation Preciado describes as pharmacopornographic capitalism, ‘…an organised flow of bodies, labour, resources, information, drugs, and capital. The spatial virtue of the house was its capacity to distribute economic agents that participated in the production, exchange, and distribution of information and pleasure. The mansion was a post-Fordist factory where highly specialised workers (the Bunnies, photographers, cameramen, technical assistants, magazine writers, and so forth)…’ used media technologies to arouse and stimulate. Playboy had eroticised what McLuhan had described as a new form of modern proximity created by ‘our electric involvement in one another’s lives’.

The Playboy Mansion was a bio-multimedia factory in the sense that it generated a ‘virtual pleasure produced through the connection of the body to a set of information techniques’. Just as Sloterdijk claims that The Crystal Palace prefigured the experience economy, Preciado makes a similar claim about the Playboy Mansion. It is important to note that in the period in which Hefner is creating the Playboy Mansion, marketers are theorising similar strategies.

Marketing management guru Philip Kotler gives us a similar formulation for the strategic production of atmospheres. He writes – the tone here is great, a commandment, as if he is actually a God of Marketing – ‘We shall use the term atmospherics to describe the conscious designing of space to create certain effects in buyers. More specifically, atmospherics is the effort to design buying environments to produce specific emotional effects in the buyer that enhance his purchase probability’. In the gendered formulation, Kotler unwittingly gives credence to Preciado’s notion of pharmacopornographic capitalism where male bodies are strategically aroused. He signals marketing’s strategic move into designing spaces and technologies for managing affect. Atmospheres are ‘attention creating’, ‘message creating’ and ‘affect creating’ media.

They are technologies of control. Kotler explains that ‘just as the sound of a bell caused Pavlov’s dog to think of food, various components of the atmosphere may trigger sensations in the buyers that create or heighten an appetite for certain goods, services or experience’. So, across these cultural histories and marketing histories, we can see how branding has always been atmospheric – invested in the production of techno-cultural spaces that program experience. In Preciado’s Playboy Mansion media and information technologies are critical to the production and maintenance of the experience enclosure.

The Playboy Mansion is an historical template for the configuration of nightlife precincts, bars, clubs, music festivals, sporting stadiums, and so on. Here emerges a critical point I derive from both Sloterdijk and Preciado: the interesting techno-cultural air-conditioners of the twentieth century are not malls. The 20th century malls, like Benjamin’s 19th century arcades, are relics. Preciado alerts us to the fact that an Arcades project for the early 21st century needs to be a history of clubs, nightlife, and the other interiors of the experience economy – beds, hotel rooms, restaurants, pop concerts and music festivals: ‘Playboy modified the aim of the consumer activity from ‘buying’ into ‘living’ or even ‘feeling’, displacing the merchandise and making the consumer’s subjectivity the very aim of the economic exchange’. Preciado sees the Playboy Mansion and clubs as ‘media platforms where ‘experiences’ are being administered’.

I take this provocation seriously. Playboy is a critically important brand not because of its iconography, but because it creates an atmosphere that uses media as programmatic devices to arouse bodies and modulate experience. Value is produced from the continuous exchange of states of mind, feelings and affects.

The pre-history of the advertising model of platforms like Snapchat, Instagram and Facebook is to be found in the media-architecture of the Playboy Mansion and the clubs, music festivals and nightlife precincts like it. Preciado swaps Gruen for Hugh Hefner as the key architect of postwar consumption. Hefner’s ‘Pornotopia… anticipated the post-electronic community-commercial environments to come’. The ‘social-entertainment-retail complex’ – be it malls, clubs, nightlife precincts or music festivals – is combined with smartphones and social media. Public life is converted into a new kind of private property: brand value and data.

Think of the techno-pleasure interiors Hefner imagined in the 1960s in relation to the predictions engineers like Paul Baran were making at the same time. Baran was, of course, the RAND Corporation engineer who conceptualised the distributed network. From their apparently extremely different viewpoints on consumer culture, neither imagined digital media as technologies of participatory expression. They were always logistical. Baran told the American Marketing Association in 1966 that a likely application of the distributed network he had conceptualised was that people would shop via television devices in their own homes, be immersed in images of products, be subject to data-driven targeting. In 1966!

Set in this historical frame, two kinds of ‘common wisdom’ about digital media are defunct. The first, via Preciado, thinking digital media via Playboy’s Pornotopia ‘corrects the common wisdom of just a few years ago, to wit, social activity will now take place in real environments enhanced and administered through virtual ones, and not the other way around’. The second, social media are logistical before they are participatory.

Branding has always been the strategic effort to use media to organise the open-ended nature of lived experience. Over the past several decades brands have been the primary investors in the engineering of new media technologies. Media technologies are engineered with capital provided by brands and marketers. And yet, think about how much of the contemporary critical work on the promotional culture of social media focusses on its participatory dimensions. Some of it even claims that this participation resists or circumvents brands. What I see in Snapchat, Instagram, Facebook and the modes of promotional culture emerging around them is the effort to engineer the relationship between the open-ended creativity of users and the data-driven calculations of marketers. We must then address, for purposes of public debate and policy, the historical process of atmospheric enclosure that sustains this relation. Media platforms are not just data-processors and participatory architecture: they are the platform of public life. Marketers are not just producers of symbolic persuasion: they are engineers of lived experience.

Cyborgs

The figure of the cyborg serves as a tool for imagining and critiquing the integration of life into digital processors. To invoke the cyborg is to critically consider the dreams and nightmares of a world where the human body cannot be disentangled from the machines it has created.

The term cyborg was coined by the cybernetic researchers Manfred Clynes and Nathan Kline in 1960. The word combines ‘cybernetic’ with ‘organism’. And, in doing so, attempts to imagine the engineering of systems of feedback and control that would incorporate or be coextensive with the living body. Clynes and Kline were seeking solutions to the problems posed by the volume of information an astronaut must process as well as the environmental difficulties of space flight.

The cyborg is startling because it imagines the human body as entirely dependent on, or bound up with, the artificial life-support systems and atmospheres it creates. The space suit is one example, but so might be the smartphone – for many of us. I’m kind of joking, but I’m kind of not. Think of all the ways in which the smartphone is a space suit, an artificial life support system. We have created societies that are functionally dependent on digital media.

The concept of the cyborg is even more important though because of the way it was pulled out of the lab, and imagined by Donna Haraway as part of a socialist feminist critique of technocultural capitalism. Haraway is one of many to reckon with the question of what the creation of artificial intelligence and digital prostheses means for our bodies, and the possibility of their redundancy. Haraway’s 1985 Cyborg Manifesto has been described in Wired magazine as, ‘a mixture of passionate polemic, abstruse theory, and technological musing…it pulls off the not inconsiderable trick of turning the cyborg from an icon of Cold War power into a symbol of feminist liberation’. It made her a pivotal figure in the cyberfeminist movement. The essay sparkles with energy and originality, and more than thirty years later remains a critical one for anyone trying to think about the relations between our bodies, technology, capitalism and power.

The cyborg is both a ‘creature of social reality’, that is, actual physical technology already in existence, and a ‘creature of fiction’, a metaphorical concept that demonstrates ways in which high-tech culture challenges Western dualisms as determinants of identity and society in the late twentieth century. The cyborg is a way of addressing the present and reclaiming the future. Haraway is critical of popular ‘new age’ or feminist discourses that arose out of Californian 60s counterculture that essentialise ‘nature’ and gender. ‘I’d rather be a cyborg than a goddess,’ she proclaimed in an effort to reject the received feminist view that science and technology were patriarchal forms of domination that blighted some essential natural human experience.

As a socialist-feminist, Haraway pays particular attention to how a technocultural, science and information driven mode of capitalism reshapes human relationships, societies, and bodies. She proposes that feminists think beyond gender categories, rejecting in a sense the binary of ‘man’ and ‘woman’ as socially and historically constructed categories always bound up in relations of domination. For her, the cyborg is both a way of understanding how our bodies are becoming organism/machine hybrids, and a political category for articulating bodies outside of established modes of power that classified and controlled bodies using categories of gender, race, sexuality, and so on. Haraway echoed cybernetic ways of thinking. She was interested in how feminism might break down Western dualisms and forms of exceptionalism by taking on the critical insight that all of us – humans, animals, the ecology of the planet itself, intelligent machines – are communication systems.

Haraway’s cyborg aimed to ‘break through’ or challenge some of the foundational patriarchal cultural myths of the West, ‘the cyborg skips the step of original unity, of identification with nature in the Western sense’. Unlike the hopes of Frankenstein’s monster, the cyborg does not expect its father to save it through a restoration of the garden; the cyborg must imagine, determine and program its own future. The main trouble with cyborgs, of course, is that they are the illegitimate offspring of militarism and patriarchal capitalism, not to mention state socialism. But illegitimate offspring are often exceedingly unfaithful to their origins. And, in this sense, the cyborg contains the possibility of transcendence – of breaking down established categories used to mark and dominate bodies. With the cyborg we could start again – creating a body, and human experience, outside of patriarchal, militaristic, capitalist domination.

For Haraway, cyborgs as a construct resist traditional dualist paradigms, capturing instead the ‘contradictory, partial and strategic’ identities of the postmodern age. Haraway’s cyborg explodes traditional ‘dualisms’ or binaries that characterise Western thought, such as human/machine, male/female, mind/body, nature/culture and so on. In this she signals ‘three crucial boundary breakdowns’ that lead to the cyborg.

First, by the late twentieth century, the boundary between human and animal is thoroughly breached. We can see this in animal rights activism, scientific research that demonstrates the many similarities in biology and intelligence between humans and other species, and the development of biomedical procedures that combine animals and humans. For instance, the human ear grown on a mouse. The cyborg as hybrid is able to identify with both humans and animals. Furthermore, Haraway argues for the critical politics of humans recognising their companionship with non-human species.

The second boundary breakdown is between living organism and machine. Haraway points out how earlier machines ‘were not self-moving, self-designing, autonomous’. By the late twentieth century, however, computer assisted design, artificial intelligence and robotics had collapsed the distinction between natural and artificial, mind and body, self-developing and externally designed. The capabilities of technology begin to mimic our personalities and surpass our abilities so that, as Haraway comments, ‘our machines are disturbingly lively, and we ourselves frighteningly inert.’ Technological determinism does not necessarily guarantee the destruction of ‘man’ by the ‘machine’; rather, as cyborgs, our amalgamation with machines ensures our survival. Intelligent machines do not obliterate the human; they enhance, alter and transform it.

The third breakdown is between the physical and non-physical, material and immaterial, or real and virtual. This breakdown is evident in the ubiquity of microprocessors in contemporary life. The miniaturised nature of digital chips changes our understanding of what a machine is. The microprocessor does not create objects as such, they are ‘nothing but signals, electromagnetic waves, a section of a spectrum, and these machines are eminently portable, mobile.’ Haraway argues then that, ‘a cyborg world is about the final imposition of a grid of control on the planet…From another perspective, a cyborg world might be about lived social and bodily realities in which people are not afraid of their joint kinship with animals and machines, not afraid of permanently partial identities and contradictory standpoints.’

A cyborg world is one where bodies are integrated into digital circuits in technical and cultural ways. In this process, it is no longer clear ‘who makes and who is made in the relation between human and machine’, … ‘no longer clear what is mind and what body in machines that resolve into coding practices’. The distinction between machine and organism, between technical and organic, becomes impractical, and perhaps even undesirable, to maintain. Those of us who live in today’s integrated digital circuits of smartphones, smart homes and biotechnologies know nothing other than a life lived within technocultural atmospheres sustained in part by the weaving of life into digital processors. We cannot leave them behind; we are posthuman in the sense that we are now knitted together with our artificial life support systems. That’s what a posthuman technoculture is. If we are cyborgs – part biology, part machine – then our bodies are the site where the power of digital media to engineer life operates. The body is the touchpoint between life itself and the power of digital technologies to shape life. The body is the interface where power expands, and where it might be jammed or rerouted.

Technocultural bodies

Our bodies are becoming, in the words of sociologist Gavin Smith, ‘walking sensor platforms’. Our bodies increasingly host devices that translate life into data. This process is at the heart of technocultural capitalism. If we look carefully we can discern in many Silicon Valley investments the effort to engineer away the friction between living bodies and the capacity of platforms to translate life into data, calculate and intervene.

To understand media platforms as technocultural projects then, we need to trace all the ways in which our living bodies are entangled with them. We need to investigate the sensory touchpoints between biology and hardware, between living flesh and digital processing. The expansion of the sensory capacities of media and the affective capacities of the body depend on a range of ‘communicative prostheses’ that envelop, are attached to, or even implanted in, our living bodies.

We can see this in efforts to engineer bio-technologies like augmented reality, neural lace, digital prosthetics and cortically-coupled vision. These technologies aim to change how the body experiences reality, to expand the embodied capacity to act and pay attention, and to alter the biological composition of the body itself.

Just listen to how Silicon Valley technologists talk about the relations between our bodies, brains and their digital devices.

A technology like augmented or mixed reality, according to Kevin Kelly, ‘exploits peculiarities in our senses. It effectively hacks the human brain in dozens of ways to create what can be called a chain of persuasion’. The perception of reality, once confined to the fleshy body, becomes an experience partly constructed by the brain and partly by digital technology.

Magic Leap’s founder Rony Abovitz explains that mixed reality is ‘the most advanced technology in the world where humans are still an integral part of the hardware… (it is) a symbiont technology, part machine, part flesh.’ This part machine, part flesh vision has a long history in culture and technology. To think of the human is to pay attention to the process through which a living species entangles itself with non-human technologies, from early tools onwards. Since Mary Shelley’s Frankenstein, at least, our cultural imagination has thought about the possibility of technologies that might transform our living biology. Technologies are emerging that seem to be doing just this.

Research scientists have prototyped a robotic arm that can be controlled with thoughts alone. A person has an implant in their brain that detects neural activity, and then trains a computer to drive an arm and hand to undertake increasingly fine motor skills. Recently, Facebook have experimented with a similar technology that enables a human to type out words just by thinking them.

Over the past decade, researchers have been experimenting with cortically-coupled vision. The basic idea is that computers learn from the visual system in our brain, tracking how the brain efficiently processes huge amounts of visual data. This technology could be used to train computers to process vision like humans can, or it could be used to learn patterns of human attention. For instance, learning what kinds of things particular humans enjoy looking at. Imagine if, as you walk down a street, a biometric media technology gradually learns what kinds of things attract your attention, give you pleasure or irritate you.

Elon Musk is one of several technologists to invest in Neural Lace, an emergent – some say technically improbable – idea. The basic objective is to create a direct interface between computers and the human brain, which may involve implanting an ultra-fine digital mesh that grows into the organic structure of the brain, directly translating neural activity into digital data. In an experiment with implanting neural lace in mice, researchers found that ‘The most amazing part about the mesh is that the mouse brain cells grew around it, forming connections with the wires, essentially welcoming a mechanical component into a biochemical system.’

Musk has said that, ‘Over time I think we will probably see a closer merger of biological intelligence and digital intelligence.’ The brain computer interface is mostly constrained by bandwidth, ‘the speed of the connection between your brain and the digital version of yourself, particularly output.’ Let’s pause there for a second: the bandwidth observation alerts us to something important. Maybe we could say the biggest engineering challenge for companies like Google, Facebook, Amazon and so on is the bottleneck at the interface between the human brain and the digital processor. All our methods of translating human intelligence – in all its sublime creativity, open-endedness and efficiency – into digital information are currently hampered by the clunky devices we have that sit at the interface between body and computer: keyboards, mice, touchscreens, augmented reality lenses. This is the truly wicked problem; perhaps whoever solves it will become the next major media platform. Just as Facebook, Amazon and Google have disrupted mass media, the next disruption will centre around whoever can code the human body and consciousness into the computer.

In each of these cases we can see a technocultural process through which media platforms, technologists and researchers invest in engineering the interface between the living body and non-human digital processors. This process is transforming what it means to be human.

It becomes increasingly difficult, or even pointless, to attempt to understand the human as somehow distinct from the technocultural atmospheres we create to sustain our existence. Living bodies are becoming coextensive with digital media.

Media platforms become like bodies, bodies become like media. In one direction we have the expansion of the sensory capacities of media. That is, media become more able to do things that once only bodies could do. Media technologies can sense and respond to bodies in a range of ways: know our heart rate, predict our mood, track our movement, identify us via biometric impressions like voice or fingerprint. And, in the other direction, we have bodies becoming coextensive with media technologies. Machines are becoming prostheses of the body, and in the process changing what a body is and does. Digital technologies alter how we perform, imagine, experience, and manage our bodies.

In the technocultures we call home, our bodies are cyborgs: composed of organic biological matter and machines. Our glasses, hearing aids, prosthetics, watches, and smartphones are all machines we attach to our bodies to enable them to function in the complex technocultures we inhabit. Many of these devices are now sensors attached to digital media platforms. Our smartphone is loaded with sensors that enable platforms to ‘know’ our bodies: voice processors, ambient light meters, accelerometers, gyroscopes, GPS. All of these sensors in various ways collect data about our bodies – their expressions, their movements in time and space, their mood and physical health.

Beyond the smartphone many of us attach smart watches and digital self-trackers to our bodies. We use these devices to know, reflect upon, judge and manage our embodied experience. Following the steady stream of prototypes from Silicon Valley we can see a future where devices might be integrated or implanted into the body. Sony recently patented a smart contact lens that records and plays back video. The lens would see and record what you see, and then using biometric data select and play back to you scenes from your everyday life. The lens could augment your view of the material world around you, or even take over your vision to immerse you in a virtual reality. With a lens like this, vision can no longer be seen as a strictly biological and embodied process; it becomes an experience co-constructed by intelligent machines.

Engineering augmented reality

Following the debate about Confederate statues and monuments in the US during August 2017, the radical Catholic priest Fr Bob Maguire tweeted, ‘Could we not have Virtual statues which the algorithm could change as directed by public opinion?’

I like this Tweet a lot. Fr Bob makes an incisive observation about the logic and politics of augmented reality – at least as it’s imagined by the major media platforms. Platforms like Facebook and Google are investing in virtual, augmented and mixed reality technologies. And, as with most of their engineering projects, encoded into these technologies is a disruptive vision for public life.

Fr Bob cheekily skewers this Silicon Valley logic in a bunch of ways.

He’s aping the Silicon Valley liberal-individualist solution to everything. Forget the difficult debate about history and identity that surrounds these monuments, just measure public opinion and produce a representation of reality that matches that opinion. Forget being caught in history, just have a culture that continuously and automatically remodels itself on whatever the current tastes and preferences of the crowd are.

But, there’s another way to read Fr Bob’s quip. I think, that in the vision of augmented reality being imagined by Google and Facebook, the ideal scenario would be that we all individually wear our augmented reality lenses and see the reality we want to see.

As long as we all have our Facebook goggles or Google lenses in, when we go into the park and look at a big statue we will see our own personal hero. White Nationalists will see Robert E. Lee; progressives will look at the same spot and see someone else – Oprah, Obama, Martin Luther King, Tina Fey eating cake.

The point is this: augmented reality – as envisioned by Facebook and Google – is the engineering effort to take the forms of algorithmic culture currently confined to the feeds of our smartphones and transpose them into the real world. At the moment, when we scroll Facebook we see the news that matches our political viewpoints. If we’re alt-right, we’re immersed in ‘fake news’ conspiracies about violent leftists; if we’re progressive, we’re immersed in outrage about Nazis and the KKK. Augmented reality would weave those simulations into the real world.

So, our public space begins to reflect back to us our political identities.

Is that what we want?

Here we encounter a dilemma. On the one hand if we all saw the statue we wanted to see, would that mean everyone would be happy? Or, would it simply mask the real divisions which the debate over the monuments stands in for? Or, does the presence – or absence – of statues and monuments we disagree with in public space function as an important and constitutive aspect of public life? That a foundational characteristic of public life is to encounter and contend with ideas and people we disagree with, that are other or alien to us?

This is my provocation: we need to see the present effort to engineer virtual, augmented and mixed reality by Facebook, Google and Snapchat as an extension of the simulation-based, predictive and algorithmic culture they have been constructing over the past decade.

We can roughly sketch the history of virtual and augmented reality as three periods.

From the 1960s to the 1980s the US military invests in the development of virtual environments and simulators that could train pilots.

From the 1980s through to the mid-1990s dreams of virtual reality move beyond the military. Silicon Valley tech-utopian developers, counter-cultural activists and artists begin to imagine virtual realities unhooked from the impediments of the material world and its flesh and steel.

From the mid-90s virtual reality technologies, and the dreams about them, went into a kind of hibernation.

This hibernation came about because the dreams of a utopian and independent virtual world or cyberspace couldn’t be technically or politically realised. In a technical sense, low-res displays, latency, motion sickness, large and heavy hardware, lack of wireless connections, no mobile internet, and a lack of interplay with social life and urban space all stalled virtual reality start-ups. Then, over the past five years firms like Oculus and Magic Leap, the former acquired by Facebook and the latter backed by Google, have been ushering in a new era of virtual reality hype. In the present moment there are three kinds of projects: virtual reality, augmented reality and mixed reality.

Virtual reality is characterised by opaque goggles. Once you are wearing them, you are in an immersive virtual world. Think of virtual reality gaming. Augmented and mixed reality are characterised by translucent screens or glasses. As you wear them digital simulations are overlaid on your view of the real world. Augmented reality is most evident in our everyday use of Snapchat lenses or filters. Via the screen we see our face overlaid with digital simulations: whiskers, a tiara, a rainbow tongue. Mixed reality is the prototyped ambition of the Google-backed Magic Leap. The limitation of augmented reality is that digital simulations are simply overlaid on the view of the real world; the simulations cannot be made to appear like they are interacting with the world.

Magic Leap are working toward building a mixed reality technology where simulations will appear to be able to interact with the world. For example, you’ll hold out your hand and a simulation of an elephant will walk around your palm. It will appear to know where your hand begins and ends. The comparison between Magic Leap and Snapchat is a useful one. Magic Leap promote a vision of mixed reality that seems to be just out of reach. Incredible. But, in the future. Snapchat, while not as technologically sophisticated, is perhaps more culturally significant. With Snapchat, augmented reality is becoming a part of everyday communication rituals. And, Snapchat are figuring out how to monetise augmented reality by selling it to brands. The major investments by Facebook, Google and Snapchat in these technologies indicate to us how serious they are about transforming their core platform architecture, pushing it beyond the smartphone and its flows of images on an opaque screen.

Media platforms like Google and Facebook are multi-dimensional engineering projects. Facebook’s Chief Operating Officer Sheryl Sandberg explained at Recode in 2016 that while the current business plan focussed on monetisation and optimisation of the existing platform, their ten-year strategic plan focussed on ‘core technology investments’ that will transform the platform infrastructure. The developments keep coming. In August 2017, Facebook lodged a patent in the US for augmented reality glasses that could be used in a virtual reality, augmented reality or mixed reality system. Via translucent glasses or lenses, we can begin to see how Facebook could transition to an augmented reality platform.

Here’s the critical point. These media platforms and partnering brands are not investing in the creation of more sophisticated mechanisms of symbolic persuasion. They are investing in the design of devices and infrastructure that can track and respond to users and their bodies in expanding logistical and sensory registers. Virtual reality projects are one instance of this: the effort to create a form of media that works not by creating symbols but by engineering experience. These companies are attempting to, as Jeremy Packer puts it, ‘code the human into the apparatus’.

Facebook, Google, Apple, Amazon, Microsoft, Sony and Samsung all have major investments in artificial reality. Facebook has 400 AR engineers. Silicon Valley has about 230 hardware and software engineering companies working on VR. Mark Zuckerberg echoes Silicon Valley consensus when he says it is ‘pretty clear’ that soon we will have glasses or contact lenses that augment our view of reality. Media platforms will augment human vision with digital simulations. Imagine looking at a room full of people and seeing their names above their heads, or a reading of their mood or level of interest in what you are saying. If you’re in class, your lecturer or tutor might be able to see the grade of your latest assessment floating above your head, or a colour coding that indicates your level of engagement in the course based on your attendance at class, logins to the learning platform, and grades. The data is available to do this: your university knows your attendance, grades and engagement with software, and Google and Facebook can recognise your face.

Augmented reality heralds a shift from media that engineer flows of information to media that engineer experience. The value of mixed or virtual reality firms like Oculus and Magic Leap is attributable in part to their claimed capacity to ‘hack’ or ‘simulate’ the human visual cortex directly. The ‘vomit problem’ or ‘motion sickness’ caused by VR devices is a container term for a number of points of ‘friction’ between the living body and the media device. The latency of the image on the screen inches from your eyes causes a conflict between your visual and vestibular systems and you vomit. This problem has also been called ‘simulator sickness’, a term that had a particular currency in the 1980s and 1990s with military training simulators. Military researchers found that motion sickness from VR subsides in experienced users. This is an indication of the capacity of the living body to learn to ‘hack around’ the visual-vestibular conflict, to accommodate itself – in neurological ways – to the media device it is entangled with.

The VR hype-industry is characterised by plenty of claims to hack the body, or if not hacking then working around, reorienting, calibrating, or tricking it. Kevin Kelly explains that artificial reality ‘hacks the human brain’ to create a ‘chain of persuasion’. The term ‘chain of persuasion’ – common in VR development – strikes me as an augmented kind of ideological control. Not persuading the subject only via a symbolic account of reality they interpret, but engineering an experience where the body feels present in a particular reality as a precursor to the subject finding representations persuasive. AR’s account is persuasive not because the human subject ‘makes sense’ of it, but because it affects both the body’s biological system and the subject’s cultural repertoire in a way that feels real.

Magic Leap’s founder Rony Abovitz puts it this way:

VR is the most advanced technology in the world where humans are still an integral part of the hardware. To function properly, VR and MR must use biological circuits as well as silicon chips. The sense of presence you feel in these headsets is created not by the screen but by your neurology… artificial reality is a symbiont technology, part machine, part flesh.

The political economy of these media engineering projects is something like this: where the profits of broadcast media – their fabled ‘rivers of gold’ – were invested in quality content, the profits of media platforms like Google and Facebook are invested in engineering projects.

The vomit problem then is a metaphor for the creative experimentation happening at the ‘touchpoint’ between living bodies and media infrastructure.

We might ask then: how will the ‘experience’ and ‘presence’ of mixed reality be monetized? Google dramatized some of these applications when they were experimenting with Glass. As we look down a city street, icons will appear above buildings the media platform predicts we might be attracted to because they sell our favourite beer or coffee, have good reviews, have a product it knows we are looking for, or because our friend is in there.

Or, perhaps stranger, a platform like Tinder, knowing our preferences for particular kinds of bodies, might be able to sort and rank clubs in a nightlife precinct relative to our cultural tastes and sexual desires. You walk down a street with an AR device on, and it registers your affective and physiological responses to people who walk by you. It scans those people, their bodies, faces and clothes, and associates them with a register of cultural and consumer tastes. And, then uses that to incrementally direct your paths through a city, a media platform, a market.

The critique of the political economy of social media has focused mostly on the capacity of platforms to conduct surveillance and target advertisements. But, as Jeremy Packer puts it, advertisers now ‘experiment with reality’: they engineer systems that configure cultural life by collecting, storing and processing data, rather than by circulating ideological narratives.

As the smartphone and its modes of judgment, curation and coding give way to a mixed reality headset, the productive labour of the user will take on new dimensions. The embodied work of tuning the interface between body and lens. The combined neurological and cultural activity of adjusting how we experience reality: from a clear distinction between reality and digital image, to being immersed in a mixed simulation. From persuasion only at the symbolic level, to persuasion also at the affective and biological level.

But also, the work of tuning the predictive simulations of mixed reality via sensory and behavioural feedback. When I look down a street and it makes judgments about where I might want to go, my behavioural, physiological or affective responses to those predictions will inform future classifications and predictions as much as any symbolic content I generate. Here my bodily reactions feed not just the optimisation of a flow of symbols, but the tuning of a calculative device and platform into my lived experience. And in doing so, enable media to engineer logics of control beyond the symbolic: to the affective and logistical.

For all the work audiences did watching television in the twentieth century, that work didn’t change the medium or infrastructure of television itself all that much. But, I think we are moving into an era where the human user is an active contributor to the engineering of media infrastructure itself. And, a critical account of audience exploitation and alienation needs to engage with that.

The ‘vomit problem’ is a useful way of thinking about not just the work of engineers, but also of users who harmonise their lives and bodies with the calculations of media. The engineer works to solve the vomit problem via the ongoing, strategic design of software and hardware. As Packer puts it, media engineering involves strategically addressing problems to optimise the human-technical relationship. The user works to solve the vomit problem too: adapting their bodily physiology, appearance and performances as they move about the world; and, providing embodied feedback via their physiological and affective responses. Here the ordinary user undertakes the productive work of rolling media infrastructure into the material world, onto the living body and through lived experience.

Make your own reality

I hesitate to do this because there’s a lot of people on Twitter these days tweeting very grim predictions. But, here goes. This is a thread posted in June 2017 by Justin Hendrix, the director of the NYC Media Lab. He plays out a scenario here that makes clear the political stakes of the difference between representation and simulation.

Trust in the media is extremely low right now, but I think it may have a lot further to go, driven by new technologies. In the next few years technologies for simulating images, video and audio will emerge that will flood the internet with artificial content. Quite a lot of it will be very difficult to discern- photorealistic simulations. Combined with microtargeting, it will be very powerful. After a few high profile hoaxes, the public will get the message- none of what we see can be trusted. It might all be doctored in some way. Researchers will race to produce technologies for verification, but will not be able to keep up with the flood of machine generated content. The platforms will attempt to solve the problem, but it will be vexing. Some governments will look for ways to arbitrate what is real. The only way out of this now is to spend as much time trying to understand the externalities of these technologies as we do creating them. But this will not happen, because there is no market mechanism for it. Practically no one has anything to gain from solving these problems.

OK, so Hendrix is one of many who understand Trump, rightly I think, as a symptom of a media culture characterised by what Mark Andrejevic calls ‘infoglut’. The constant flood of views, opinions, theories and images amounts to a kind of disinformation. It becomes harder for us to mediate a shared reality that corresponds with lived experience, that coheres with history or that is jointly understood. In a situation of infoglut actors will emerge, like Putin and Trump, who will thrive on information/disinformation overload.

Hendrix’s grim warning illuminates what is lost when representation gives way to simulation. A media culture organised around the logic of representation is one in which words and images denote or depict objects, people and events that actually exist in the material world. A media culture organised around the logic of simulation is one in which words and images can be experienced as real, even where there is no corresponding thing the sign refers to in the ‘real world’ or outside the simulation itself.

This is what Hendrix forewarns: the creation of artificially intelligent bots that can produce statements, images and videos that a human experiences as real. His point is this: as non-human actors like artificially intelligent bots begin to participate in our public discourse, they have a corrosive effect on the process through which we create a shared understanding of reality.

If we can’t be sure that something we see or read in ‘the media’ is even said by a human, we begin to lose trust in the very idea of using media to understand the world at all. We become reflexively cynical and sceptical about the very character of representation. If we begin to live in a world where we cannot even tell if a human is speaking, then what we lose is the capacity to make human judgments about the quality of representation.

Representation itself begins to break down.

A month after Hendrix’s prediction, computer scientists at the University of Washington reported that they had produced an AI that could create an extremely ‘believable’ video of Barack Obama appearing to say things he had said in another context. OK, so they are not yet at the point of creating a video where Obama says things he has not said, but they’re getting close.

This is how they described their study.

Given audio of President Barack Obama, we synthesize a high quality video of him speaking with accurate lip sync, composited into a target video clip. Trained on many hours of his weekly address footage, a recurrent neural network learns the mapping from raw audio features to mouth shapes. Given the mouth shape at each time instant, we synthesize high quality mouth texture, and composite it with proper 3D pose matching to change what he appears to be saying in a target video to match the input audio track. Our approach produces photorealistic results.

So, Hendrix is right, it seems. We are entering an era where a neural network could produce video of someone saying something they never said. And we, as humans, would be unable to tell. If this kind of artificially constructed speech becomes widespread then the consequence is a dramatic unravelling of the socially-constructed institution of representation. In short, a falling apart of the process through which we as humans go about creating shared understanding of the world.

If the ‘industrial’ media culture of the twentieth century exercised power via its mass reproduction of imagery, then the ‘digital’ media culture of today is learning to exercise power via simulation. To make a rough distinction, we might say if television was culturally powerful in part because of its capacity to reproduce and circulate images through vast populations, then the power of digital media is different in part because of its capacity to use data-driven non-human machines to create, customise and modulate images and information.

Here’s the thing. Simulation is both a cultural and a technical condition.